Texture-based Dynamic Effects (EN)

(Page from the original documentation, NDL Gamebryo 1.1)

Dynamic Multitexture Optimization

Where possible, keep the affected node list as compact as you can: currently, the renderers do not cull any effects based on geometry. As a result, it is just as expensive to project a texture onto an object outside of the effect's "frustum" as it is to project the texture onto a pertinent object.

Overview

The NiDynamicEffect subclass NiTextureEffect implements projected multitexturing. These types of effects are very powerful tools for creating complex spotlights, light shafts, stained-glass window effects, reflective surfaces, dynamic shadows and other effects. The effects involve projecting a texture onto objects in the scene using a projection other than the camera view.

As NiDynamicEffect subclasses, NiTextureEffect objects include support for affected node lists and effect switches. As NiAVObjects, they can be attached to scene graph objects to simulate headlights, laser sights, and other effects.

Texture Effect Type

The texture type setting in an NiTextureEffect controls the type of visual effect the texture has upon the surface appearance of the textured objects in its affected node scope list. The possible options include:

· PROJECTED_LIGHT – All active projected-light NiTextureEffect objects are summed together with the static DarkMap multitexture. This sum is then multiplied by the base texture of the object. As a result, the overall effect can only darken the base texture, but the lights themselves sum together in a physically realistic manner. In theory, there is no limit to the number of projected lights that can be active on an object, but most renderers impose one (either a fixed limit or one dictated by performance).

· PROJECTED_SHADOW – Each projected shadow NiTextureEffect is multiplied by the base texture. As a result, each additional shadow map can only darken the base texture, and overlapping shadows will tend to darken the base object more deeply than disjoint shadows. Theoretically, there is no limit to the number of projected shadows that can be active on an object, but most renderers impose some form of limit (either fixed or performance limited).

· ENVIRONMENT_MAP – The environment map represents the mirror-like reflection of the surrounding environment in the surface of an object. The effect is added to the lit and shadowed base texture. An optional static multitexture, the GlossMap, may be specified to determine which parts of a surface are reflective. This allows complex behavior, such as a surface that is matte in some regions and shiny in others. Note that the GlossMap is not supported on all renderers. Only one environment map at a time may be applied to a given surface.

· FOG_MAP – The fog map represents the density (in its alpha channel) and the color (in its color channel) of the fog at a given height and distance from the camera. It is blended onto the final color of the surface, and is capable of making an entire object disappear into the fog. Only one fog map at a time may be applied to a given surface.
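The combination order described in the list above can be sketched in a few lines of standalone C++. This is only an illustration of the arithmetic, not Gamebryo renderer code: the `Color`, `SurfaceInputs` and `CombineEffects` names are hypothetical. Clamping the light sum to [0, 1] and modelling the GlossMap as a single scalar are assumptions made to keep the example short.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical RGB color type; not a Gamebryo class.
struct Color { float r, g, b; };

static float Saturate(float v) { return std::min(1.0f, std::max(0.0f, v)); }
static Color Saturate(const Color& c) { return Color{ Saturate(c.r), Saturate(c.g), Saturate(c.b) }; }
static Color Add(const Color& a, const Color& b) { return Color{ a.r + b.r, a.g + b.g, a.b + b.b }; }
static Color Mul(const Color& a, const Color& b) { return Color{ a.r * b.r, a.g * b.g, a.b * b.b }; }
static Color Lerp(const Color& a, const Color& b, float t)
{
    return Color{ a.r + (b.r - a.r) * t, a.g + (b.g - a.g) * t, a.b + (b.b - a.b) * t };
}

// Hypothetical bundle of the texel values sampled at one surface point.
struct SurfaceInputs
{
    Color base;                          // base texture
    Color darkMap;                       // static DarkMap multitexture
    std::vector<Color> projectedLights;  // PROJECTED_LIGHT samples
    std::vector<Color> projectedShadows; // PROJECTED_SHADOW samples
    Color environment;                   // ENVIRONMENT_MAP sample
    float gloss;                         // GlossMap value (assumed scalar)
    Color fogColor;                      // FOG_MAP color channels
    float fogDensity;                    // FOG_MAP alpha channel
};

Color CombineEffects(const SurfaceInputs& in)
{
    assert(in.fogDensity >= 0.0f && in.fogDensity <= 1.0f);

    // Projected lights sum together with the DarkMap...
    Color light = in.darkMap;
    for (const Color& l : in.projectedLights)
        light = Add(light, l);

    // ...and the (assumed clamped) sum multiplies the base texture.
    Color result = Mul(in.base, Saturate(light));

    // Each projected shadow multiplies the result, so overlaps darken further.
    for (const Color& s : in.projectedShadows)
        result = Mul(result, s);

    // The environment map is added to the lit and shadowed base.
    result = Saturate(Add(result, Mul(in.environment, Color{ in.gloss, in.gloss, in.gloss })));

    // The fog map is blended onto the final color using its density.
    return Lerp(result, in.fogColor, in.fogDensity);
}
```

With a fog density of 1.0 the function returns the fog color regardless of the other inputs, which matches the description of an object disappearing into the fog.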

Dynamic texture coordinate generation is the heart of the NiTextureEffect. The automatic dynamic generation of texture coordinates determines whether the effect appears to be a spotlight, a sunbeam, or the reflection of the surrounding scene. Three settings control this behavior: the model-space texture projection matrix, the model-space texture projection translation, and the texture coordinate generation type.

The texture coordinate generation type controls the basic source texture coordinates and the type of transform applied:

· WORLD_PARALLEL: This mode takes as its inputs the world-space object vertices, transforms them by the world-space projection matrix and translation, and uses the X and Y components of the output as U and V. This mode tends to create parallel projections for sunbeam/infinite/directional lighting effects.

· WORLD_PERSPECTIVE: This mode takes as its inputs the world-space object vertices, transforms them by the world-space projection matrix and translation, and uses the X/Z and Y/Z components of the output as U and V. This mode tends to create perspective transformations for spotlight or "movie projector" effects.

· SPHERE_MAP: This mode transforms camera-space object normals into texture coordinates using a spherical projection. This is used to create environment-mapping effects.

· DIFFUSE_CUBE_MAP: In this mode, texture coordinates are generated for each affected object by transforming the world-space object-normal vectors directly into the texture as a cube map. This capability is detailed in the System Details -- Texturing manual; it is generally used for diffuse environment mapping and projected lights. This mode is only supported for NiRenderedCubeMap- and NiSourceCubeMap-based NiTextureEffect objects on hardware that supports cube mapping.

· SPECULAR_CUBE_MAP: In this mode, texture coordinates are generated for each affected object by transforming the world-space reflection vectors directly into the texture as a cube map. This capability is detailed in the System Details -- Texturing manual; it is generally used for specular environment mapping and projected lights. This mode is only supported for NiRenderedCubeMap- and NiSourceCubeMap-based NiTextureEffect objects on hardware that supports cube mapping.
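As an illustration of the SPHERE_MAP mode, the sketch below maps a camera-space normal to texture coordinates. The text above does not give the exact formula Gamebryo uses, so the mapping shown (remapping the normalized X and Y components from [-1, 1] to [0, 1]) is one common convention and should be read as an assumption. `Vec3`, `TexCoord` and `SphereMapFromNormal` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper types; not Gamebryo classes.
struct Vec3 { float x, y, z; };
struct TexCoord { float u, v; };

// Map a camera-space normal to sphere-map coordinates (assumed convention).
TexCoord SphereMapFromNormal(const Vec3& n)
{
    const float len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    assert(len > 0.0f); // a zero-length normal has no direction

    // Normalize, then remap X and Y from [-1, 1] to [0, 1].
    return TexCoord{ 0.5f * (n.x / len) + 0.5f, 0.5f * (n.y / len) + 0.5f };
}
```

Under this convention a normal pointing straight along the camera's Z axis samples the center of the texture, while normals perpendicular to it sample the rim. Whether V needs to be flipped depends on the texture origin convention of the renderer.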

Note that the world transforms of an NiTextureEffect affect the generation of texture coordinates in WORLD_PARALLEL and WORLD_PERSPECTIVE mode. The specified model projection matrix and translation define a mapping from the model space of the NiTextureEffect into texture space. The world scale, rotation and translation of the NiTextureEffect are then applied to these model-space transformations to produce the world-space transformations that map world-space vertices into texture space.
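The difference between WORLD_PARALLEL and WORLD_PERSPECTIVE comes down to whether the transformed X and Y are divided by Z. The following minimal sketch shows both, assuming the world-space projection is stored as a 3x3 matrix plus a translation vector and has already been derived from the effect's world transforms. `Vec3`, `Mat3`, `TexCoord` and the function names are hypothetical and do not come from the Gamebryo API.

```cpp
#include <cassert>

// Hypothetical helper types; not Gamebryo classes.
struct Vec3 { float x, y, z; };
struct Mat3 { float m[3][3]; };
struct TexCoord { float u, v; };

// Apply the world-space projection matrix and translation to a vertex.
static Vec3 Project(const Mat3& proj, const Vec3& trans, const Vec3& p)
{
    return Vec3{
        proj.m[0][0] * p.x + proj.m[0][1] * p.y + proj.m[0][2] * p.z + trans.x,
        proj.m[1][0] * p.x + proj.m[1][1] * p.y + proj.m[1][2] * p.z + trans.y,
        proj.m[2][0] * p.x + proj.m[2][1] * p.y + proj.m[2][2] * p.z + trans.z
    };
}

// WORLD_PARALLEL: X and Y of the transformed vertex become U and V.
TexCoord WorldParallel(const Mat3& proj, const Vec3& trans, const Vec3& worldVertex)
{
    const Vec3 q = Project(proj, trans, worldVertex);
    return TexCoord{ q.x, q.y };
}

// WORLD_PERSPECTIVE: X/Z and Y/Z of the transformed vertex become U and V.
TexCoord WorldPerspective(const Mat3& proj, const Vec3& trans, const Vec3& worldVertex)
{
    const Vec3 q = Project(proj, trans, worldVertex);
    assert(q.z != 0.0f); // the divide is undefined in the projector's own plane
    return TexCoord{ q.x / q.z, q.y / q.z };
}
```

Because of the divide, a vertex twice as far along the projector's Z axis lands half as far from the texture origin, which is what produces the widening cone of a spotlight or "movie projector".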

Fogging

Fog, also known as haze or "depth cueing", is conceptually related to lighting, but is functionally independent in Gamebryo. Fog is a rendering effect available on some rendering systems that causes objects to gradually fade to a single "fog color" as they get further away from the camera's position. This has the practical effect of making distant objects appear "hazy", and is often used to make a close-in far clipping plane appear less jarring (as objects will fade into invisibility instead of being abruptly clipped).

There are three modes for fog in Gamebryo; two use the classic "fog increases with distance from the camera" method of fogging, while the third allows the application to specify any fog value at any vertex. Fog is controlled in Gamebryo by the NiFogProperty rendering property described later in this chapter.

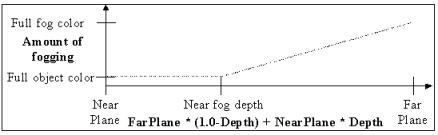

Depth-based fog in Gamebryo is controlled by a single parameter that determines the nearest distance from the camera at which objects begin to fade to the fog color. Objects always attain the full fog color at the far plane. Currently, the Direct3D, GameCube, Xbox and PS2 renderers support fogging that is linear with respect to Z value. Several also support "range squared" fog, which is more accurate (as it depends on view position, not orientation) but more expensive to compute. Please see the platform renderer documentation for more details on each renderer's capabilities.

Note that the depth parameter is independent of actual near and far plane values. A depth value of 0.0 indicates that there is no fogging (0.0 should never actually be used as a fog depth), while a depth of 1.0 indicates that fogging should start at the near plane. Values between 0.0 and 1.0 will linearly interpolate the near fogging distance between the near and far planes.
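The relationship between the depth parameter and the distance at which fog begins can be written out as a short sketch. The function names are hypothetical, and the linear ramp from the fog start to the far plane is an assumption based on the description of Z-linear fog above. The precondition that depth is greater than 0.0 mirrors the warning that 0.0 should never be used.

```cpp
#include <algorithm>
#include <cassert>

// Distance at which fogging begins: depth 1.0 gives the near plane,
// and smaller values move the start linearly toward the far plane.
float FogStartDistance(float depth, float nearPlane, float farPlane)
{
    assert(depth > 0.0f && depth <= 1.0f);
    assert(farPlane > nearPlane);
    return farPlane + depth * (nearPlane - farPlane);
}

// Fraction of fog color applied at distance z (0 = none, 1 = full fog),
// assuming a linear ramp that reaches full fog at the far plane.
float LinearFogFactor(float z, float depth, float nearPlane, float farPlane)
{
    const float start = FogStartDistance(depth, nearPlane, farPlane);
    const float t = (z - start) / (farPlane - start);
    return std::min(1.0f, std::max(0.0f, t));
}
```

For example, with a near plane at 1, a far plane at 101 and a depth of 0.5, fog starts at a distance of 51 and an object at a distance of 76 is half fogged. A depth of 0.0 would place the fog start on the far plane and make the ramp zero-length, which is why the assertion rejects it.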